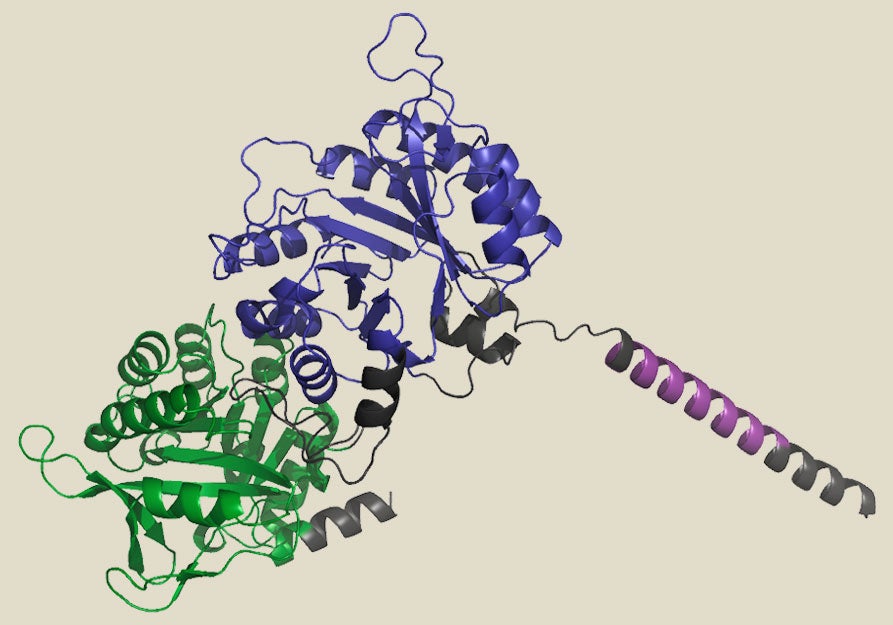

Machine learning (ML) and other AI-based computational tools have proven their prowess at predicting real-world protein structures. AlphaFold 2, an algorithm developed by scientists at DeepMind that can confidently predict protein structure purely on the basis of an amino acid sequence, has become almost a household name since its launch in July 2021. Today, AlphaFold 2 is used routinely by many structural biologists, with over 200 million structures predicted.

This ML toolbox appears capable of generating made-to-order proteins too, including those with functions not present in nature. This is an appealing prospect because, despite natural proteins’ vast molecular diversity, there are many biomedical and industrial problems that evolution has never been compelled to solve.

Scientists are now rapidly moving toward a future in which they can use careful computational analysis to infer the underlying principles governing the structure and function of real-world proteins, and then apply those principles to construct bespoke proteins with user-specified functions. Lucas Nivon, CEO and cofounder of Cyrus Biotechnology, believes the ultimate impact of such in silico-designed proteins will be massive and compares the field to the fledgling biotech industry of the 1980s. “I think in 30 years 30, 40 or 50 percent of drugs will be computationally designed proteins,” he says.

To date, companies operating in the protein design space have largely focused on retooling existing proteins to perform new tasks or enhance specific properties, rather than true design from scratch. For example, scientists at Generate Biomedicines have drawn on existing knowledge about the SARS-CoV-2 spike protein and its interactions with the receptor protein ACE2 to design a synthetic protein that can consistently block viral entry across diverse variants. “In our internal testing, this molecule is quite resistant to all of the variants that we’ve seen thus far,” says cofounder and chief technology officer Gevorg Grigoryan, adding that Generate aims to apply to the FDA to clear the way for clinical testing in the second quarter of this year. More ambitious programs are on the horizon, although it remains to be seen how soon the leap to de novo design—in which new proteins are built entirely from scratch—will come.

The field of AI-assisted protein design is blossoming, but its roots stretch back more than two decades, to work by academic researchers such as David Baker and colleagues at what is now the Institute for Protein Design at the University of Washington. Starting in the late 1990s, Baker, who has co-founded companies in this space including Cyrus, Monod and Arzeda, oversaw the development of Rosetta, a foundational software suite for predicting and manipulating protein structures.

Since then, Baker and other researchers have developed many other powerful tools for protein design, powered by rapid progress in ML algorithms—and particularly, by advances in a subset of ML techniques known as deep learning. This past September, for example, Baker’s team published their deep learning ProteinMPNN platform, which allows them to input the structure they want and have the algorithm spit out an amino acid sequence likely to produce that de novo structure, achieving a greater than 50 percent success rate.

Some of the greatest excitement in the deep learning world relates to generative models that can create entirely new proteins, never seen before in nature. These modeling tools belong to the same category of algorithms used to produce eerie and compelling AI-generated artwork in programs like Stable Diffusion or DALL-E 2 and text in programs like ChatGPT. In those cases, the software is trained on vast amounts of annotated images or text and then uses the patterns it has learned to produce new pictures or passages in response to user queries. The same feat can be achieved with protein sequences and structures, where the algorithm draws on a rich repository of real-world biological information to dream up new proteins based on the patterns and principles observed in nature. To do this, however, researchers also need to give the computer guidance on the biochemical and physical constraints that inform protein design, or else the resulting output will offer little more than artistic value.

One effective strategy to understand protein sequence and structure is to approach them as ‘text’, using language modeling algorithms that follow rules of biological ‘grammar’ and ‘syntax’. “To generate a fluent sentence or a document, the algorithm needs to learn about relationships between different types of words, but it needs to also learn facts about the world to make a document that’s cohesive and makes sense,” says Ali Madani, a computer scientist formerly at Salesforce Research who recently founded Profluent.
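To make the ‘text’ analogy concrete, here is a deliberately tiny sketch: a bigram model that learns which amino acid tends to follow which, scores a sequence by how ‘grammatical’ it looks, and samples new sequences one residue at a time, much as a language model predicts the next word. Everything in it is an illustrative assumption, including the function names, the boundary tokens and the made-up training sequences. It is not Profluent’s or anyone else’s method; real protein language models use large neural networks trained on millions of natural sequences rather than simple transition counts.

```python
import math
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard one-letter residue codes
START, END = "^", "$"  # illustrative (hypothetical) sequence-boundary tokens


def train_bigram(sequences):
    """Count residue-to-residue transitions, starting every count at 1 (add-one smoothing)."""
    counts = {prev: {nxt: 1 for nxt in AMINO_ACIDS + END} for prev in START + AMINO_ACIDS}
    for seq in sequences:
        if any(res not in AMINO_ACIDS for res in seq):
            raise ValueError(f"non-standard residue in {seq!r}")
        tokens = [START, *seq, END]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def log_prob(counts, seq):
    """Log-likelihood of a sequence under the bigram model (higher = more 'grammatical')."""
    tokens = [START, *seq, END]
    total = 0.0
    for prev, nxt in zip(tokens, tokens[1:]):
        row = counts[prev]
        total += math.log(row[nxt] / sum(row.values()))
    return total


def sample(counts, max_len=50, seed=None):
    """Generate a new sequence one residue at a time, like next-word prediction."""
    rng = random.Random(seed)
    prev, out = START, []
    while len(out) < max_len:
        row = counts[prev]
        nxt = rng.choices(list(row), weights=list(row.values()))[0]
        if nxt == END:
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)


if __name__ == "__main__":
    # Made-up training "sentences"; these are not real protein data.
    model = train_bigram(["MKTAYIAKQR", "MKTLLILAVA", "MKKLAVLAIA"])
    print(sample(model, max_len=20, seed=1))
    print(log_prob(model, "MKTLA"), log_prob(model, "WWWWW"))
```

Add-one smoothing keeps every transition possible, so sampling never stalls on a residue pair that was absent from the training set; the trade-off is that the model also assigns some probability to implausible sequences.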

In a recent publication, Madani and colleagues describe a language modeling algorithm that can yield novel computer-designed proteins that can be successfully produced in the lab with catalytic activities comparable to those of natural enzymes. Language modeling is also a key part of Arzeda’s toolbox, according to co-founder and CEO Alexandre Zanghellini. For one project, the company used multiple rounds of algorithmic design and optimization to engineer an enzyme with improved stability against degradation. “In three rounds of iteration, we were able to go from complete disappearance of the protein after four weeks to retention of effectively 95 percent activity,” he says.

A recent preprint from researchers at Generate describes a new generative modeling-based design algorithm called Chroma, which includes several features that improve its performance and success rate. These include diffusion models, an approach used in many image-generation AI tools that makes it easier to manipulate complex, multidimensional data. Chroma also employs algorithmic techniques to assess long-range interactions between residues that are far apart on the protein’s chain of amino acids, called a backbone, but that may be essential for proper folding and function. In a series of initial demonstrations, the Generate team showed that they could obtain sequences that were predicted to fold into a broad array of naturally occurring and arbitrarily chosen structures and subdomains—including the shapes of the letters of the alphabet—although it remains to be seen how many will form these folds in the lab.
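Diffusion models learn to reverse a process that gradually corrupts training data with noise. The sketch below shows only the forward, noise-adding half, applied to 3D backbone coordinates using the generic Gaussian formulation and linear variance schedule common in image diffusion models. It is an assumption-laden illustration, not Chroma’s algorithm: the schedule values, the toy five-residue backbone and the function names are hypothetical, and a working generator would also need a trained neural network that undoes each noising step.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (a common generic choice, assumed here)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bar(betas, t):
    """Fraction of the original signal variance left after steps 0..t: product of (1 - beta)."""
    prod = 1.0
    for beta in betas[: t + 1]:
        prod *= 1.0 - beta
    return prod


def noise_coordinates(coords, betas, t, rng):
    """Generic forward diffusion (not Chroma's noise model):
    x_t = sqrt(a_bar) * x_0 + sqrt(1 - a_bar) * eps, with eps ~ N(0, 1) per coordinate."""
    a_bar = alpha_bar(betas, t)
    signal, noise_scale = math.sqrt(a_bar), math.sqrt(1.0 - a_bar)
    return [
        tuple(signal * x + noise_scale * rng.gauss(0.0, 1.0) for x in atom)
        for atom in coords
    ]


if __name__ == "__main__":
    betas = linear_beta_schedule(1000)
    # Hypothetical 5-residue backbone: alpha-carbons spaced 3.8 angstroms apart along x.
    backbone = [(3.8 * i, 0.0, 0.0) for i in range(5)]
    rng = random.Random(0)
    print(noise_coordinates(backbone, betas, t=10, rng=rng))   # still close to the input
    print(noise_coordinates(backbone, betas, t=999, rng=rng))  # essentially random noise
```

Early steps leave the backbone nearly intact, whereas by the final step almost no trace of the original coordinates remains. Generation runs this in reverse, starting from noise and denoising step by step toward a plausible structure.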

In addition to the new algorithms’ power, the tremendous amount of structural data captured by biologists has also allowed the protein design field to take off. The Protein Data Bank, a critical resource for protein designers, now contains more than 200,000 experimentally solved structures. The AlphaFold 2 algorithm is also proving to be a game changer here, providing training material and guidance for design algorithms. “They are models, so you have to take them with a grain of salt, but now you have this extraordinarily large amount of predicted structures that you can build upon,” says Zanghellini, who adds that this tool is a core component of Arzeda’s computational design workflow.

For AI-guided design, more training data are always better. But existing gene and protein databases are constrained by a limited range of species and a heavy bias towards humans and commonly used model organisms. Basecamp Research is building an ultra-diverse repository of biological information obtained from samples collected in biomes in 17 countries, ranging from the Antarctic to the rainforest to hydrothermal vents on the ocean floor. Chief technology officer Philipp Lorenz says that once the genomic data from these specimens are analyzed and annotated, the team can assemble a knowledge graph that reveals functional relationships between diverse proteins and pathways that would not be obvious from sequence-based analysis alone. “It’s not just generating a new protein,” says Lorenz. “We are finding protein families in prokaryotes that have been thought to exist only in eukaryotes.” [Prokaryotes, single-celled organisms such as bacteria, lack the more sophisticated internal cellular structures found in eukaryotes, a group that includes multicellular organisms such as plants and animals.]

This means many more starting points for AI-guided protein design efforts, and Lorenz says that his team’s own design experiments have achieved an 80 percent success rate at producing functional proteins.

But proteins do not function in a vacuum. Tess van Stekelenburg, an investor at Hummingbird Ventures, notes that Basecamp, one of the companies funded by the firm, captures all manner of environmental and biochemical context for the proteins it identifies. The resulting ‘metadata’ accompanying each protein sequence can help guide the engineering of proteins that express and function optimally in particular conditions. “It gives you a lot more ability to constrain for things like pH, temperature or pressure, if that’s what you’re planning to look at,” she says.

Some companies are also looking to augment public structural biology resources with data of their own. Generate is in the process of building a multi-instrument cryo-electron microscopy facility, which will allow it to generate near-atomic-resolution structures at relatively high throughput. Such internally generated structural data are more likely to include relevant metadata about individual proteins than data from publicly available resources.

In-house wet lab facilities are another critical component of the design process because experimental results are, in turn, used to train the algorithm to achieve even better outcomes in future rounds. Grigoryan notes that, although Generate likes to spotlight its algorithmic toolbox, the majority of its workforce comprises experimentalists.

And Bruno Correia, a computational biologist at the École Polytechnique Fédérale de Lausanne, says that the success of a protein design effort depends on close consultation between algorithm experts and experienced wet-lab practitioners. “This notion of how protein molecules are and how they behave experimentally builds in a lot of constraints,” says Correia. “I think it’s a mistake to handle biological entities just as a piece of data.”

Biological validation is an extremely important consideration for investors in this sector, says van Stekelenburg. “If you are doing de novo, the real gold standard is not which architecture are you using—it’s what percentage of your designed proteins had the end desired property,” she says. “If you can’t show that, then it doesn’t make sense.” Accordingly, most companies pursuing computational design are still focused on tuning protein function rather than overhauling it, shortening the leap between prediction and performance.

Nivon says that Cyrus typically works with existing drugs and proteins that fall short in a particular parameter. “This could be a drug that needs better efficacy, lower immunogenicity or a better toxicity profile,” he says. For Cradle, the primary goal is to improve protein therapeutics by optimizing properties like stability. “We’ve benchmarked our model against empirical studies so that people can get a sense of how well this might work in an experimental setting,” says founder and CEO Stef van Grieken.

Arzeda’s focus is on enzyme engineering for industrial applications. The company has already succeeded in creating proteins with novel catalytic functions for use in agriculture, materials and food science. These projects often begin with a relatively well-established core reaction that is catalyzed in nature. But to adapt these reactions to work with a different substrate, “you need to remodel the active site dramatically,” says Zanghellini. Some of the company’s projects include a plant enzyme that can break down a widely used herbicide, as well as enzymes that can convert relatively low-value plant byproducts into useful natural sweeteners.

Generate’s first-generation engineering projects have focused on optimization. In one published study, company scientists showed that they could “resurface” the amino acid-metabolizing enzyme L-asparaginase from *Escherichia coli* bacteria, altering the amino acid composition of its exterior to greatly reduce its immunogenicity. But with the new Chroma algorithm, Grigoryan says that Generate is ready to embark on more ambitious projects, in which the algorithm can start building true de novo designs with user-designated structural and functional features. Of course, Chroma’s design proposals must then be validated by experimental testing, although Grigoryan says “we’re very encouraged by what we’ve seen.”

Zanghellini believes the field is near an inflection point. “We’re starting to see the possibility of really truly creating a complex active site and then building the protein around it,” he says. But he adds that many more challenges await. For example, a protein with excellent catalytic properties might be exceedingly difficult to manufacture at scale or exhibit poor properties as a drug. In the future, however, next-generation algorithms should make it possible to generate de novo proteins optimized to tick off many boxes on a scientist’s wish list rather than just one.

This article is reproduced with permission and was first published on February 23, 2023.